Introduction: Quantum Computing

Quantum computing has quietly moved from the margins of physics textbooks into the core syllabus of many competitive examinations. From civil services and engineering entrance tests to management and research-oriented assessments, questions about qubits, superposition, and quantum supremacy now appear with increasing regularity.

This rise has also produced confusion.

Public discussion often swings between two extremes: quantum computers are portrayed either as magical machines that will instantly break the internet, or as purely theoretical toys with no real-world relevance. Neither view helps students preparing for exams that demand conceptual clarity rather than hype.

For aspirants facing quantitative exams and science-based papers, the challenge is not memorizing jargon, but understanding what quantum computing actually is, what it is not, and why many simplified explanations fall short. This article aims to correct common myths, explain the underlying ideas in plain language, and restore nuance to a topic that is often oversold or misunderstood.

What follows is not a technical deep dive for specialists, but a careful explainer designed to align with how exam questions are framed: grounded in physics, cautious in claims, and clear about limits.

Understanding Quantum Computing: The Core Idea

What Makes a Computer “Quantum”?

At its foundation, quantum computing applies principles of quantum mechanics to information processing.

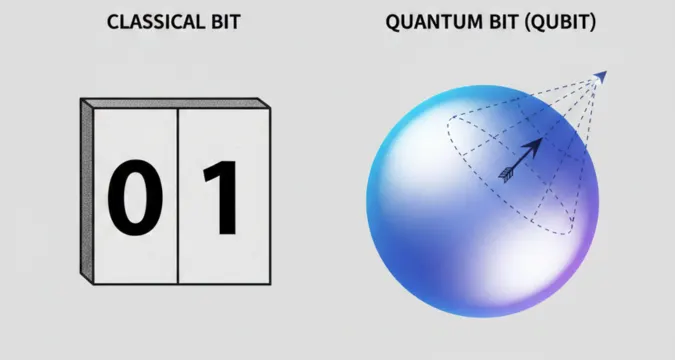

Classical computers use bits, which exist in one of two states:

- 0

- 1

Quantum computers use quantum bits, or qubits. A qubit can exist in:

- State 0

- State 1

- Or a quantum combination of both at the same time

This difference is the source of both quantum computing’s promise and its frequent misinterpretation.

Key Concepts Explained Clearly

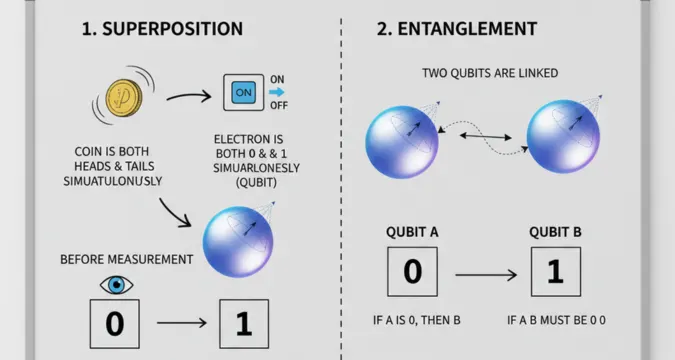

Superposition

Superposition means a qubit can represent multiple possibilities simultaneously until it is measured.

A common oversimplification says:

“A qubit is 0 and 1 at the same time.”

The more accurate explanation is:

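A qubit exists in a combination of states weighted by probability amplitudes. Measurement collapses this combination to a single definite value, with the probability of each outcome given by the squared magnitude of its amplitude.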

For exams, the emphasis is on:

- Probability amplitudes

- Measurement collapsing the state

- Difference from classical uncertainty
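The three exam points above can be illustrated with a few lines of Python. This is only a classroom-style sketch, not how a real quantum device is programmed: the function names `measurement_probabilities` and `measure` are invented for illustration, and the qubit is modelled as a pair of amplitudes `alpha` and `beta` for the states 0 and 1.

```python
import math
import random

def measurement_probabilities(alpha, beta):
    """Return (P(0), P(1)) for a qubit with amplitudes alpha (state 0) and beta (state 1)."""
    norm = abs(alpha) ** 2 + abs(beta) ** 2  # normalise in case the inputs are not unit length
    return abs(alpha) ** 2 / norm, abs(beta) ** 2 / norm

def measure(alpha, beta, rng=random):
    """Simulate one measurement: the result is always a single definite bit."""
    p0, _ = measurement_probabilities(alpha, beta)
    return 0 if rng.random() < p0 else 1

# Equal superposition: both amplitudes are 1/sqrt(2), so each outcome has probability of about 0.5.
alpha = beta = 1 / math.sqrt(2)
p0, p1 = measurement_probabilities(alpha, beta)

# Flipping the sign of one amplitude leaves these probabilities unchanged,
# yet it is a different quantum state. A classical coin has no such sign,
# which is one way to see the difference from classical uncertainty.
p0_minus, p1_minus = measurement_probabilities(alpha, -beta)

print(p0, p1, p0_minus, p1_minus)
print([measure(alpha, beta) for _ in range(10)])  # ten definite bits, never "both"
```

Each call to `measure` returns either 0 or 1, never a blend, which is the sense in which measurement collapses the state.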

Entanglement

Entanglement links qubits so that the measurement outcome of one is correlated with the measurement outcome of the other, regardless of the distance between them.

This does not mean:

- Faster-than-light communication

- Information transfer without signals

Instead, entanglement reflects correlated outcomes governed by shared quantum states.
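A small simulation may help separate correlation from communication. The sketch below assumes one particular entangled state, the Bell state (|00> + |11>)/sqrt(2), and only considers measuring both qubits in the standard 0/1 basis; the names `BELL`, `marginal_b`, and `sample_pair` are illustrative, and the example does not demonstrate the general no-signalling result.

```python
import math
import random

# Amplitudes of the Bell state (|00> + |11>) / sqrt(2), keyed by the outcome pair (a, b).
BELL = {
    (0, 0): 1 / math.sqrt(2),
    (0, 1): 0.0,
    (1, 0): 0.0,
    (1, 1): 1 / math.sqrt(2),
}

def joint_probabilities(state):
    """Probability of each joint outcome: squared magnitude of its amplitude."""
    return {bits: abs(amp) ** 2 for bits, amp in state.items()}

def marginal_b(state):
    """Probability distribution of qubit B on its own, ignoring qubit A."""
    probs = joint_probabilities(state)
    return {b: sum(p for (_, bb), p in probs.items() if bb == b) for b in (0, 1)}

def sample_pair(state, rng):
    """Draw one joint measurement outcome (a, b)."""
    probs = joint_probabilities(state)
    outcomes = list(probs)
    return rng.choices(outcomes, weights=[probs[o] for o in outcomes])[0]

rng = random.Random(0)
samples = [sample_pair(BELL, rng) for _ in range(1000)]

always_equal = all(a == b for a, b in samples)  # the two results always agree
b_alone = marginal_b(BELL)                      # but B alone looks like a fair coin

print(always_equal, b_alone)
```

The holder of qubit B sees a 50/50 random bit. The perfect agreement only becomes visible once the two sets of results are compared, and that comparison needs an ordinary signal.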

Quantum Interference

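Quantum algorithms work by arranging for the amplitudes of correct outcomes to reinforce one another and those of incorrect outcomes to cancel. Because this kind of interference can only be engineered for problems with suitable structure, quantum speedups apply only to specific problem types.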
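The smallest example of interference is applying the Hadamard gate twice to a qubit that starts in state 0. The sketch below is a plain-Python illustration with a hand-written 2x2 matrix multiply; `apply` is a helper invented here, not a library call.

```python
import math

# Hadamard gate: turns a definite state into an equal superposition.
H = [
    [1 / math.sqrt(2), 1 / math.sqrt(2)],
    [1 / math.sqrt(2), -1 / math.sqrt(2)],
]

def apply(gate, state):
    """Multiply a 2x2 gate by a 2-component state vector."""
    return [sum(gate[r][c] * state[c] for c in range(2)) for r in range(2)]

zero = [1.0, 0.0]                # qubit starts in state 0
after_one = apply(H, zero)       # equal superposition of 0 and 1
after_two = apply(H, after_one)  # the two paths to state 1 cancel, the paths to state 0 add

probs_one = [abs(a) ** 2 for a in after_one]
probs_two = [abs(a) ** 2 for a in after_two]

print(probs_one)  # roughly [0.5, 0.5]
print(probs_two)  # roughly [1.0, 0.0]
```

After one gate the outcome is a coin flip; after two it is certain again. Two fair classical coin flips in a row could never restore certainty, which is what the minus sign in the matrix makes possible.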

Why Quantum Computing Enters Exam Syllabi

Conceptual Value Over Practical Deployment

Exam boards include quantum computing because it:

- Tests understanding of modern physics

- Connects theory with emerging technology

- Evaluates reasoning, not rote learning

Most questions focus on:

- Conceptual differences from classical computing

- Definitions and implications

- Limits and challenges

Rarely do exams expect:

- Hardware-level details

- Mathematical derivations beyond basics

- Knowledge of current commercial products

This distinction is often lost in popular media coverage.

Broader Background: From Physics to Computation

Historical Roots

Quantum computing did not emerge from computer science alone.

Key milestones include:

- Max Planck’s quantum hypothesis (1900)

- Development of quantum mechanics in the 1920s

- Richard Feynman’s proposal (early 1980s) that simulating quantum systems efficiently may require computers that are themselves quantum

The field gained momentum when researchers realized that classical computers struggle to simulate quantum behavior as systems grow in complexity.

Why Classical Computers Fall Short

Classical simulation of a quantum system requires tracking a number of variables that grows exponentially with the size of the system: describing n qubits in general takes 2^n amplitudes. Quantum computers avoid this by naturally operating under the same physical laws they simulate.
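The exponential growth is easy to put into numbers. The sketch below assumes the most direct simulation method, storing every amplitude of the state, and assumes each amplitude is a complex number held as two 64-bit floats (16 bytes). Both are illustrative assumptions; specialised simulation techniques can do better for particular circuits.

```python
BYTES_PER_AMPLITUDE = 16  # assumption: one complex number stored as two 64-bit floats

def state_vector_bytes(n_qubits):
    """Memory needed to store all 2**n amplitudes of an n-qubit state."""
    return (2 ** n_qubits) * BYTES_PER_AMPLITUDE

def state_vector_gib(n_qubits):
    """The same figure expressed in gibibytes (2**30 bytes)."""
    return state_vector_bytes(n_qubits) / 2 ** 30

for n in (10, 30, 50):
    print(n, "qubits:", state_vector_bytes(n), "bytes =", state_vector_gib(n), "GiB")
```

Under these assumptions 30 qubits need 16 GiB, and 50 qubits need 16 PiB. Each extra qubit doubles the requirement.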

This insight matters in exams because it explains:

- Why quantum computing exists

- What problems it is suited for

Current Developments Without the Hype

What Has Actually Changed Recently

Quantum computing progress is incremental, not revolutionary.

Key developments include:

- Improved qubit stability (coherence time)

- Better error-correction techniques

- Small-scale demonstrations of quantum advantage for narrow tasks

However:

- No general-purpose quantum computer exists

- Most systems remain experimental

- Scaling remains a major challenge

This reality contrasts sharply with claims that quantum computers will soon replace classical systems.

Drivers of Research Interest

Interest is driven by:

- Cryptography concerns

- Drug discovery simulations

- Optimization problems

- Materials science

For exams, it is important to recognize that:

Quantum computers complement classical computers; they do not replace them.

Implications: Why This Matters for Students and Society

Exam Relevance

For students, misunderstanding quantum computing leads to:

- Overgeneralized answers

- Misuse of terminology

- Confusion between potential and reality

Examiners often reward:

- Clear distinctions

- Balanced explanations

- Awareness of limitations

Broader Societal Impact

Quantum computing’s real significance lies in:

- Long-term research investment

- Strategic technology planning

- Scientific capability building

It does not currently threaten:

- Personal data security overnight

- Existing computing infrastructure

Understanding this balance is essential for informed discussion.

Common Myths and Oversimplified Narratives

Myth 1: Quantum Computers Are Faster at Everything

Reality:

Quantum computers are expected to outperform classical ones only for specific problems, such as:

- Integer factorization (Shor’s algorithm)

- Unstructured search (Grover’s algorithm, which gives a quadratic rather than exponential speedup)

For tasks like:

- Word processing

- Web browsing

- Basic calculations

Classical computers remain superior.
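To see what a problem-specific speedup looks like, the search case can be compared in numbers. The sketch below uses standard textbook approximations for finding one marked item among N: about (N + 1)/2 lookups on average classically, and roughly (pi/4) * sqrt(N) iterations for Grover’s algorithm. The function names are illustrative, and the figures count queries only, ignoring hardware speed and error-correction overhead.

```python
import math

def classical_expected_queries(n_items):
    """Average lookups to find one marked item when checking entries in random order."""
    return (n_items + 1) / 2

def grover_iterations(n_items):
    """Approximate number of Grover iterations for one marked item: floor(pi/4 * sqrt(N))."""
    return math.floor(math.pi / 4 * math.sqrt(n_items))

for n in (100, 10_000, 1_000_000):
    print(n, classical_expected_queries(n), grover_iterations(n))
```

For a million items this gives 500000.5 classical lookups against 785 Grover iterations: a real gain, but a quadratic one, and only for this kind of task.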

Myth 2: Superposition Means Infinite Parallel Computation

Reality:

While superposition allows multiple states, measurement yields only one result. Quantum algorithms must carefully manipulate probabilities to extract useful answers.

This is why:

- Not all problems benefit

- Algorithm design is crucial
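The point about manipulating probabilities can be made concrete with a toy simulation of Grover-style amplitude amplification on 8 items. This is a sketch under simplifying assumptions: the amplitudes are tracked as an ordinary Python list (which a real quantum computer cannot inspect), and `grover_probabilities` and the marked index 5 are chosen purely for illustration.

```python
import math

def grover_probabilities(n_items, marked, iterations):
    """Return the measurement probabilities after the given number of Grover iterations."""
    amps = [1 / math.sqrt(n_items)] * n_items  # uniform superposition over all items
    for _ in range(iterations):
        amps[marked] = -amps[marked]           # oracle: flip the sign of the marked amplitude
        mean = sum(amps) / n_items
        amps = [2 * mean - a for a in amps]    # diffusion: reflect every amplitude about the mean
    return [a * a for a in amps]               # amplitudes are real here, so squaring suffices

before = grover_probabilities(8, marked=5, iterations=0)
after = grover_probabilities(8, marked=5, iterations=2)

print(before[5])  # chance of measuring the marked item with no processing
print(after[5])   # chance after two iterations
```

Measuring the untouched superposition finds the marked item only one time in eight, no better than guessing. Two carefully designed iterations raise that chance to roughly 95 percent, which is the work the algorithm actually does.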

Myth 3: Quantum Computers Will Instantly Break Encryption

Reality:

Breaking most public-key encryption in use today would require:

- Millions of stable, error-corrected qubits

- Long coherence times

- Advanced fault tolerance

Current machines are far from this threshold.

Exams often test this nuance explicitly.

Myth 4: Quantum Entanglement Allows Instant Communication

Reality:

Entanglement creates correlation, not communication. Transmitting information still requires a classical channel, which cannot carry signals faster than light.

This distinction is a frequent exam trap.

Myth 5: Quantum Computing Is Purely Theoretical

Reality:

While practical deployment is limited, quantum computing is:

- Experimentally validated

- Actively researched

- Already influencing cryptography planning

It exists between theory and application.

Where Public Discussion Loses Nuance

Media Simplification

Headlines favor dramatic claims:

- “Quantum supremacy achieved”

- “End of classical computing”

Such phrases obscure:

- Narrow experimental conditions

- Lack of scalability

- Context-specific results

Educational Shortcuts

Some preparatory materials:

- Reduce concepts to slogans

- Ignore limitations

- Conflate physics with marketing language

This creates exam answers that sound impressive but lack precision.

A related analysis on emerging technologies and public misunderstanding was previously explored in The Vue Times’ coverage of science communication gaps in advanced computing narratives, highlighting how complexity is often sacrificed for speed.

What to Watch Next

Technological Signals

Students and readers should monitor:

- Advances in error correction

- Hybrid classical-quantum models

- Post-quantum cryptography standards

These areas are more likely to appear in exams than speculative breakthroughs.

Exam-Oriented Takeaways

Expect future questions to focus on:

- Comparative advantages

- Ethical and security implications

- Realistic timelines

Balanced answers will increasingly be rewarded.

Key Takeaways for Clear Understanding

- Quantum computing applies quantum mechanics to computation, not magic.

- It excels at specific problems, not general tasks.

- Many myths arise from oversimplification and media hype.

- Exams prioritize conceptual clarity, limitations, and definitions.

- Understanding nuance matters more than memorizing buzzwords.

A grounded perspective not only improves exam performance but also fosters scientific literacy.

Frequently Asked Questions

Why is quantum computing important for competitive exams now?

Exam bodies increasingly test awareness of emerging technologies that shape future policy, science, and industry. Quantum computing represents a convergence of physics and computation, making it ideal for assessing conceptual reasoning rather than technical skill.

Will quantum computing replace classical computers in the future?

No. Quantum computers are designed to work alongside classical systems, handling specialized tasks. Classical computers remain more efficient for everyday operations and will continue to dominate general computing.

What is the most misunderstood concept students struggle with?

Superposition is often misunderstood as “doing all calculations at once.” In reality, it involves probability amplitudes that must be carefully manipulated before measurement to extract a meaningful result.

How should students frame answers about quantum advantages?

Answers should specify which problems benefit, why classical systems struggle, and what limitations exist. Vague claims about speed or power usually score poorly.

Is quantum computing more physics or computer science?

It is inherently interdisciplinary. Exams generally treat it as applied physics with computational implications, focusing on principles rather than programming or hardware engineering.

Published by The Vue Times, where clarity matters more than headlines.